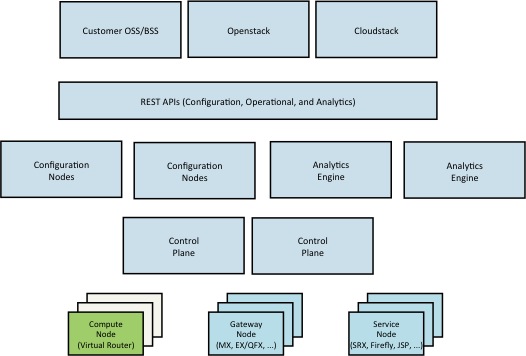

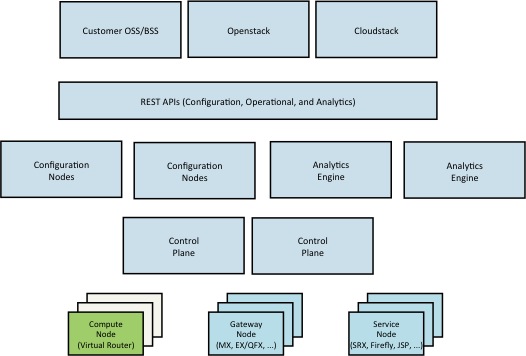

The Contrail Virtual Network Controller (VNC) is composed of the following components:

Configuration node

The configuration node provides a REST API that allows an orchestration system to define virtual-networks, virtual-interfaces and network policies that control the flow of traffic between virtual-networks. The configuration node is responsible for storing the persistent state of the system, which defines the desired state of the network.

Control node

The control node implements a distributed database that contains the ephemeral state of the system. Control nodes federate with each other using the BGP routing protocol. BGP is also used to communicate with network virtualization enabled routers that support the BGP/MPLS L3VPN protocol.

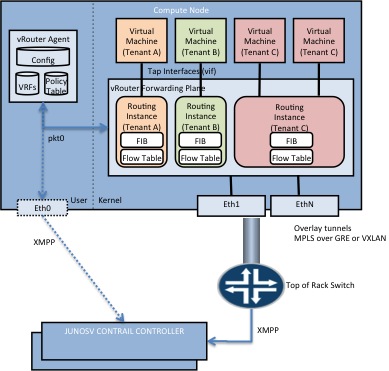

Control nodes distribute network reachability information to compute-nodes using the XMPP protocol. The same channel is used to distribute desired network configuration state such as the properties of virtual-network interfaces instantiated in a specific compute-node.

Analytics engine

The network analytics engine collects information such as network flow records from the compute-nodes and makes it available via a REST API. Additionally, each of the Contrail components periodically reports status information about its own state and the state of the primary data objects in the system.

Compute node

The compute node includes both a user-space process (the agent) and a dataplane component that executes as a loadable kernel module on the host operating system.

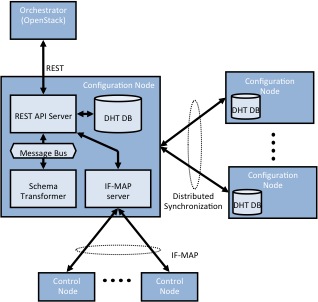

Configuration node

The IF-MAP specification defines a set of base operations that can be used to store and query an object graph. The Contrail VNC defines an object model (vnc_cfg.xsd) that expresses network virtualization concepts as IF-MAP identifiers and metadata relations.

The configuration node APIs are autogenerated from this schema.

Several APIs expose higher-level concepts that need to be translated into lower-level objects. For instance, a network-policy may result in both an "access-control list" (ACL) and the import of network reachability across virtual-networks. The ACL is implemented by the data-plane, while the routing information import/export function is implemented by the control-node. The "schema-transformer" process performs this translation whenever necessary.
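The translation described above can be sketched as follows. This is a minimal illustration, not the actual schema-transformer code: the `PolicyRule` and `AclEntry` classes, the `transform` function, and the choice to exchange reachability in both directions are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PolicyRule:
    """Hypothetical high-level rule: allow traffic between two virtual-networks."""
    src_network: str
    dst_network: str
    protocol: str
    dst_port: int


@dataclass(frozen=True)
class AclEntry:
    """Hypothetical low-level object enforced by the data-plane."""
    src_network: str
    dst_network: str
    protocol: str
    dst_port: int
    action: str


def transform(rules):
    """Expand policy rules into ACL entries and (importer, exporter) route pairs."""
    acl_entries = []
    route_imports = set()
    for rule in rules:
        acl_entries.append(
            AclEntry(rule.src_network, rule.dst_network,
                     rule.protocol, rule.dst_port, "pass")
        )
        # Assumption: reachability is exchanged in both directions so that
        # return traffic can be routed.
        route_imports.add((rule.src_network, rule.dst_network))
        route_imports.add((rule.dst_network, rule.src_network))
    return acl_entries, sorted(route_imports)


rules = [PolicyRule("frontend", "backend", "tcp", 5432)]
acl_entries, route_imports = transform(rules)
print(acl_entries)
print(route_imports)
```

In this sketch the ACL entries would be handed to the data-plane, while the route pairs would drive import/export on the control-node.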

The configuration node is composed of multiple processes:

API server

The API server is responsible for persisting the state of the schema objects as well as publishing the corresponding information in the IF-MAP server. It also implements additional functionality such as IP address management.

Schema transformer

The schema transformer converts higher-level concepts into low-level objects that can be implemented by the network. For instance, it allocates one or more routing-instance objects for a virtual-network. Routing instances correspond to VRFs in the dataplane.

Service monitor

The service monitor process creates and monitors virtual-machine instances that implement network services such as NAT or firewalls.

Discovery Service

The discovery service publishes the IP address and port of the multiple components of the configuration node. The system runs multiple instances of each process for high-availability and load balancing purposes.

Domain Name (DNS) Server

Multi-tenant aware DNS server.
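To illustrate how a discovery service can combine publication of endpoints with load balancing across several instances of a process, here is a small in-memory sketch. The `DiscoveryRegistry` class, the round-robin selection, the service name and the addresses are hypothetical and do not describe the actual Contrail discovery protocol.

```python
class DiscoveryRegistry:
    """Hypothetical registry: services publish (ip, port), clients look them up."""

    def __init__(self):
        self._endpoints = {}  # service name -> list of (ip, port)
        self._next = {}       # service name -> index of the next endpoint to return

    def publish(self, service, ip, port):
        endpoints = self._endpoints.setdefault(service, [])
        if (ip, port) not in endpoints:
            endpoints.append((ip, port))

    def lookup(self, service):
        endpoints = self._endpoints.get(service)
        if not endpoints:
            raise LookupError(f"no instance published for {service!r}")
        # Round-robin across the published instances to spread the load.
        index = self._next.get(service, 0) % len(endpoints)
        self._next[service] = index + 1
        return endpoints[index]


registry = DiscoveryRegistry()
registry.publish("api-server", "10.0.0.1", 8082)  # illustrative addresses
registry.publish("api-server", "10.0.0.2", 8082)

picks = [registry.lookup("api-server") for _ in range(3)]
print(picks)
```

A client that asks three times is handed the first instance, then the second, then the first again.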

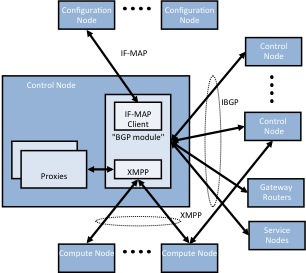

Control node

The control-node implements an in-memory distributed database that contains the transient state of the network such as the current network reachability information.

For each virtual-network defined at the configuration level, there exist one or more routing-instances that contain the corresponding network reachability. A routing-instance contains the IP host routes of the virtual-machines in the network, as well as the routing information for the other virtual-networks with which those virtual-machines are allowed to communicate directly.
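The relationship between routing-instances and reachability can be sketched with route targets in the style of BGP/MPLS L3VPN. This is an illustrative model only: the `RoutingInstance` class, the `advertise` helper, the target values and the next-hop names are assumptions, not the control-node's data structures.

```python
from dataclasses import dataclass, field


@dataclass
class RoutingInstance:
    name: str
    export_target: str
    import_targets: set = field(default_factory=set)
    routes: dict = field(default_factory=dict)  # prefix -> next hop

    def __post_init__(self):
        # An instance always sees the routes of its own virtual-network.
        self.import_targets.add(self.export_target)


def advertise(instances, origin, prefix, next_hop):
    """Install a host route in every instance that imports the origin's target."""
    for instance in instances:
        if origin.export_target in instance.import_targets:
            instance.routes[prefix] = next_hop


red = RoutingInstance("red", "target:64512:1")
blue = RoutingInstance("blue", "target:64512:2")
green = RoutingInstance("green", "target:64512:3")

# Hypothetical policy: red and blue may communicate directly; green is isolated.
red.import_targets.add(blue.export_target)
blue.import_targets.add(red.export_target)

instances = [red, blue, green]
advertise(instances, red, "10.1.1.3/32", "compute-1")
advertise(instances, green, "10.3.3.7/32", "compute-2")

for instance in instances:
    print(instance.name, sorted(instance.routes))
```

The host route of the virtual-machine in `red` appears in `blue` because the two networks are connected, while `green` only holds its own route.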

Control-node processes federate with each other using the BGP protocol and specifically the BGP/MPLS L3VPN extensions. The same protocol is used to interoperate with physical routers that support network virtualization.

The control-node also contains an IF-MAP subsystem, which is responsible for filtering the configuration database and propagating to the compute node agents only the subset of configuration information that is relevant to them.
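One way to picture this filtering is as a traversal of the configuration graph that starts at the virtual-machines hosted on a given compute node. The sketch below assumes directed "depends on" links and made-up identifier names; the actual IF-MAP subsystem may select objects differently.

```python
from collections import deque


def relevant_subgraph(links, start_nodes):
    """Return every identifier reachable from start_nodes over directed links."""
    neighbors = {}
    for source, target in links:
        neighbors.setdefault(source, set()).add(target)

    seen = set(start_nodes)
    queue = deque(start_nodes)
    while queue:
        node = queue.popleft()
        for peer in neighbors.get(node, ()):
            if peer not in seen:
                seen.add(peer)
                queue.append(peer)
    return seen


# Hypothetical configuration graph: (object, object it depends on).
links = [
    ("vm:web-1", "vmi:web-1-eth0"),
    ("vmi:web-1-eth0", "vn:frontend"),
    ("vn:frontend", "ri:frontend"),
    ("vm:db-1", "vmi:db-1-eth0"),
    ("vmi:db-1-eth0", "vn:backend"),
    ("vn:backend", "ri:backend"),
]

# The agent on this compute node only hosts vm:web-1.
subset = relevant_subgraph(links, ["vm:web-1"])
print(sorted(subset))
```

Objects that belong only to `vm:db-1` are never sent to this agent.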

Communication between the control-node and the agent takes place over an XMPP-like control channel. The message format follows the XMPP specification, but the state machine does not yet. This will be corrected whenever possible.

The control-node receives "subscription" messages from the agent for routing information on specific routing-instances and for configuration information pertaining to virtual-machines instantiated in the respective compute node. It then pushes any current information as well as updates to both routing and configuration objects relevant to each agent.
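The subscribe-then-push behaviour can be sketched as a small publish/subscribe model. The `ControlNode` and `Agent` classes and their methods are hypothetical and leave out the XMPP transport entirely; they only show that a subscriber first receives the current state and then every later update.

```python
class Agent:
    """Hypothetical agent that records what the control-node pushes to it."""

    def __init__(self, name):
        self.name = name
        self.table = {}  # (routing instance, prefix) -> next hop

    def receive(self, instance, prefix, next_hop):
        self.table[(instance, prefix)] = next_hop


class ControlNode:
    def __init__(self):
        self.routes = {}       # routing instance -> {prefix: next hop}
        self.subscribers = {}  # routing instance -> list of agents

    def subscribe(self, agent, instance):
        self.subscribers.setdefault(instance, []).append(agent)
        # Push the information that is already known for this instance.
        for prefix, next_hop in self.routes.get(instance, {}).items():
            agent.receive(instance, prefix, next_hop)

    def update_route(self, instance, prefix, next_hop):
        self.routes.setdefault(instance, {})[prefix] = next_hop
        # Push the update only to agents subscribed to this instance.
        for agent in self.subscribers.get(instance, []):
            agent.receive(instance, prefix, next_hop)


control = ControlNode()
control.update_route("frontend", "10.1.1.3/32", "compute-1")

agent = Agent("compute-2")
control.subscribe(agent, "frontend")                          # gets current state
control.update_route("frontend", "10.1.1.4/32", "compute-3")  # gets the update
control.update_route("backend", "10.2.2.5/32", "compute-4")   # not subscribed

print(agent.table)
```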

Each agent connects to two control-nodes in an active-active model.

All the routing functionality in the system happens at the control node level. The agent is unaware of policies that influence which routes are present in each routing table.

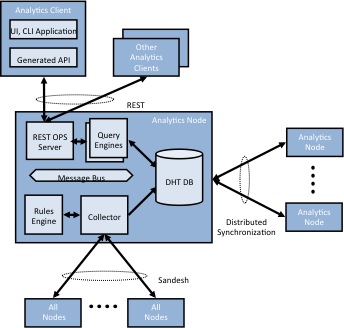

Analytics Engine

The Analytics node provides a REST interface to a time series database containing the state of various objects in the system such as virtual-networks, virtual-machines, configuration and control nodes as well as traffic flow records.

The collected data is stored in a DHT/NoSQL distributed database (Apache Cassandra) for scale-out.

The collected objects are defined using an IDL so that the schema of database records can be easily communicated to users or applications performing queries.

This IDL unifies all diagnostic information in the system, including the data available through the HTTP interface provided by each Contrail process.

Information can be collected periodically or based on event triggers.
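As a rough picture of what querying a time series of object state involves, the following sketch keeps records sorted by timestamp and returns those that fall within a time range. It is an in-memory stand-in written for illustration; the `TimeSeriesStore` class, the object name and the record fields are assumptions and say nothing about the actual Cassandra schema or REST query format.

```python
from bisect import bisect_left, bisect_right


class TimeSeriesStore:
    """Hypothetical store: per-object records kept sorted by timestamp."""

    def __init__(self):
        self._series = {}  # object name -> (timestamps, records)

    def append(self, name, timestamp, record):
        timestamps, records = self._series.setdefault(name, ([], []))
        # Insert in timestamp order so that late-arriving reports are handled.
        index = bisect_right(timestamps, timestamp)
        timestamps.insert(index, timestamp)
        records.insert(index, record)

    def query(self, name, start, end):
        """Return the records with start <= timestamp <= end."""
        timestamps, records = self._series.get(name, ([], []))
        return records[bisect_left(timestamps, start):bisect_right(timestamps, end)]


store = TimeSeriesStore()
store.append("vn:frontend", 100, {"in_bytes": 10})
store.append("vn:frontend", 220, {"in_bytes": 40})
store.append("vn:frontend", 160, {"in_bytes": 25})  # arrives out of order

window = store.query("vn:frontend", 100, 200)
print(window)
```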

Compute node

The compute-node is composed of the vRouter "agent" and "datapath".

The datapath runs as a loadable kernel module on the host (or dom0) operating system.

The agent is a user-space process that communicates with the control node. It runs a Thrift service that is used by the compute node plugin (e.g. nova or XAPI) to enable the virtual-interface associated with a virtual-machine instance.

The dataplane associates each virtual interface with a VRF table and performs the overlay encapsulation functionality. It implements Access Control Lists (ACLs), NAT, multicast replication and mirroring.
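The association between a virtual interface, its VRF table and the overlay encapsulation can be sketched as a longest-prefix-match lookup followed by wrapping the packet with an outer destination and a label. This is a user-space illustration only: the `Vrf` class, the `forward` function, the interface name, the addresses and the label values are assumptions, and the kernel module's real data structures are not shown.

```python
import ipaddress


class Vrf:
    """Hypothetical per-virtual-network forwarding table."""

    def __init__(self, name):
        self.name = name
        self.routes = []  # list of (network, next hop, label)

    def add_route(self, prefix, next_hop, label):
        self.routes.append((ipaddress.ip_network(prefix), next_hop, label))

    def lookup(self, address):
        """Return the most specific matching route, or None."""
        ip = ipaddress.ip_address(address)
        matches = [route for route in self.routes if ip in route[0]]
        if not matches:
            return None
        return max(matches, key=lambda route: route[0].prefixlen)


interface_to_vrf = {}  # virtual interface name -> Vrf


def forward(interface, dst):
    """Look up dst in the interface's VRF and describe the encapsulated packet."""
    vrf = interface_to_vrf[interface]
    route = vrf.lookup(dst)
    if route is None:
        return None  # no route in this VRF: the packet is dropped
    _, next_hop, label = route
    return {"outer_dst": next_hop, "label": label, "inner_dst": dst}


frontend = Vrf("frontend")
frontend.add_route("10.1.1.0/24", "192.0.2.10", 17)
frontend.add_route("10.1.1.3/32", "192.0.2.11", 23)
interface_to_vrf["tap-web-1"] = frontend

print(forward("tap-web-1", "10.1.1.3"))
print(forward("tap-web-1", "10.1.1.9"))
print(forward("tap-web-1", "10.9.9.9"))
```

The host route wins over the covering subnet for `10.1.1.3`, and a destination with no route in the VRF is not forwarded at all, which is what keeps virtual-networks isolated from one another.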